Which Monitoring Capabilities Does Apache Spark Have?

Prerequisites: For information about the supported versions of the job plug-ins, generate a dynamic Data Integration report from the IBM Software Product Compatibility Reports web site and select the Supported Software tab.

An Overview of Apache Spark.

Among other things, the Spark web UI shows a list of scheduler stages and tasks. A dedicated monitoring tool can give you visibility into the apps running on your clusters, with essential metrics such as memory usage and CPU for troubleshooting their performance. In one reported setup, enabling Spark monitoring via Prometheus required a couple of changes to the Spark code base.

Spark runs on Hadoop, Mesos, standalone, or in the cloud. The Apache Spark user interface comes with a basic utility dashboard. Spark already has its own monitoring capabilities, including a very nice web UI and a REST API.

It's not surprising that the usage of Spark is booming, as Google Trends shows. Every SparkContext launches a web UI, by default on port 4040, that displays useful information about the application.
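The same information the web UI shows is also available as JSON from Spark's monitoring REST API under `/api/v1`. Below is a minimal sketch. It assumes the driver UI is reachable at `localhost:4040`, which is only the default: Spark moves to 4041, 4042, and so on if the port is taken. It also assumes the application records carry an `attempts` list with `startTimeEpoch`, `completed`, and `duration` fields. The helper names `fetch_json` and `summarize_applications` are hypothetical.

```python
import json
import urllib.request

# Assumption: the driver's web UI listens on the default port 4040 of localhost.
# Replace the host (and the port, if 4040 was already taken) for your deployment.
BASE_URL = "http://localhost:4040/api/v1"


def fetch_json(path, base_url=BASE_URL, timeout=10):
    """GET a Spark monitoring REST endpoint and decode its JSON body."""
    with urllib.request.urlopen(base_url + path, timeout=timeout) as resp:
        return json.load(resp)


def summarize_applications(apps):
    """Return (id, name, completed, duration_ms) for each app's most recent attempt."""
    rows = []
    for app in apps:
        attempts = app.get("attempts", [])
        # Pick the attempt with the latest start time; an app with no attempts gets defaults.
        latest = max(attempts, key=lambda a: a.get("startTimeEpoch", 0), default={})
        rows.append((
            app["id"],
            app.get("name", ""),
            latest.get("completed", False),
            latest.get("duration", 0),
        ))
    return rows
```

With an application running, `summarize_applications(fetch_json("/applications"))` returns one tuple per application. The status and total-duration columns match what the UI shows for completed runs.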

If an Apache Spark application is still running, you can monitor its progress.

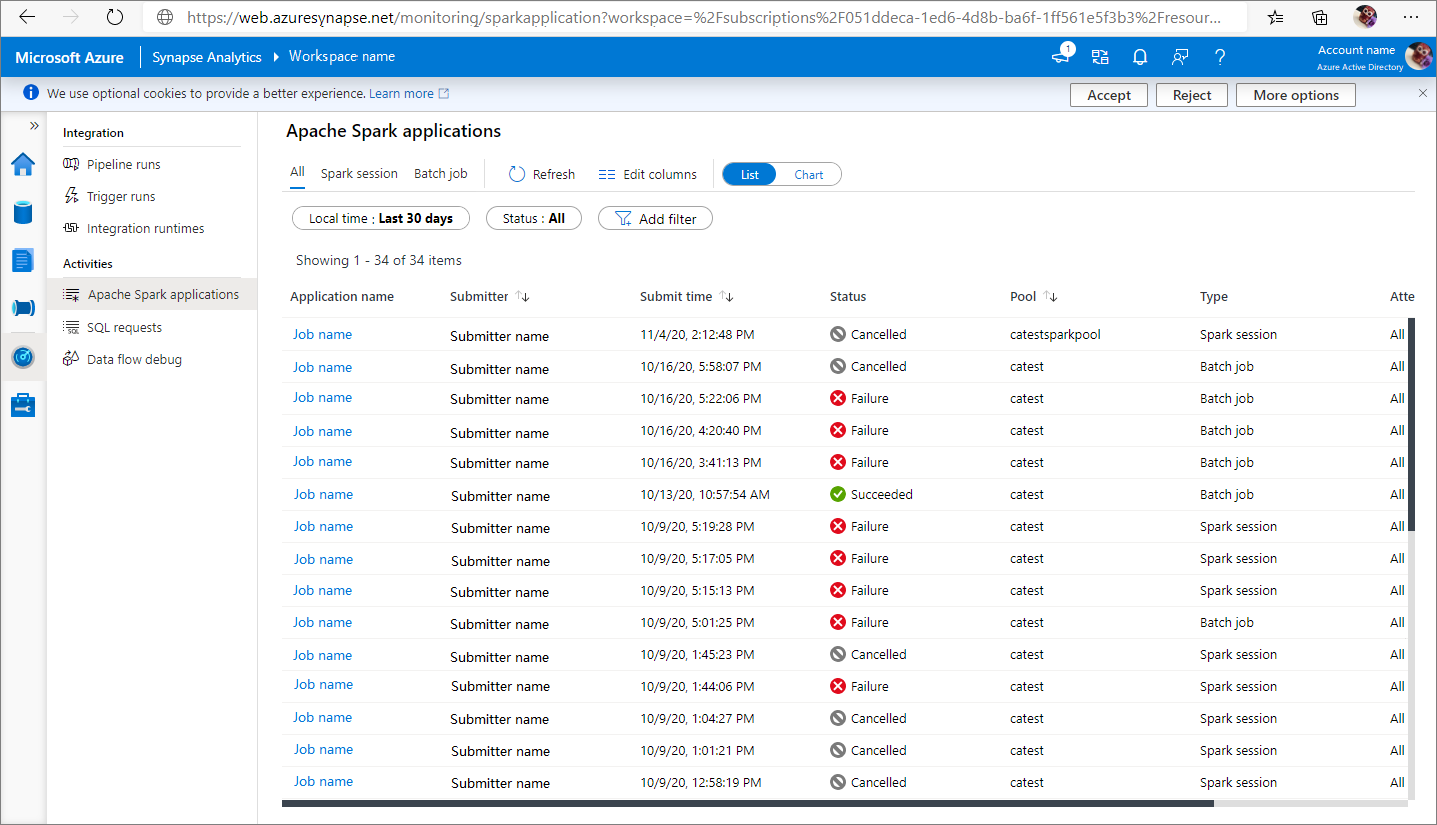

There are a number of additional libraries that work with Spark Core to enable SQL, streaming, and machine learning applications. Swaroop Ramachandra presents the first part of his series on monitoring in Apache Spark, which explains the need for a monitoring program at three levels. You can view all Apache Spark applications from Monitor > Apache Spark applications.

Big data solutions are designed to handle data that is too large or complex for traditional databases.

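Useful task-level metrics include the number of active, failed, and completed tasks, and the maximum, average, and minimum task duration. Apache Spark has an advanced DAG execution engine that supports acyclic data flow and in-memory computing. Spark applications can be monitored through web UIs, metrics, and external instrumentation.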
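The task-level metrics mentioned above can be computed from Spark's monitoring REST API. The sketch below makes two assumptions. Job records come from `/api/v1/applications/[app-id]/jobs`, with the fields `numActiveTasks`, `numCompletedTasks`, and `numFailedTasks`. Task records come from `/api/v1/applications/[app-id]/stages/[stage-id]/[stage-attempt-id]/taskList`, with an optional `duration` in milliseconds. The helper names are hypothetical, and summing per-job counts is a simplification rather than how the UI itself aggregates.

```python
import statistics


def task_counts(jobs):
    """Sum active/completed/failed task counts over job records."""
    totals = {"active": 0, "completed": 0, "failed": 0}
    for job in jobs:
        totals["active"] += job.get("numActiveTasks", 0)
        totals["completed"] += job.get("numCompletedTasks", 0)
        totals["failed"] += job.get("numFailedTasks", 0)
    return totals


def task_duration_stats(tasks):
    """Return (max, mean, min) duration in ms over tasks that report a duration."""
    durations = [t["duration"] for t in tasks if t.get("duration") is not None]
    if not durations:
        return None  # no task has a duration yet
    return max(durations), statistics.mean(durations), min(durations)
```

Returning `None` for an empty task list keeps callers from confusing "no data yet" with a real zero-millisecond duration.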

To view the details of a running Apache Spark application, select the submitted Apache Spark application and view its details. Spark can access diverse data sources, including HDFS, Cassandra, HBase, and S3. Another metric worth tracking is the total number of shuffle read and write bytes.
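Shuffle totals can be summed over the stage records returned by `/api/v1/applications/[app-id]/stages`. This minimal sketch assumes each record carries `shuffleReadBytes` and `shuffleWriteBytes` fields, as described in Spark's REST API documentation. Both helper names are hypothetical, and `human_bytes` is only a display convenience.

```python
def shuffle_totals(stages):
    """Sum shuffle read/write bytes over stage records."""
    read = sum(s.get("shuffleReadBytes", 0) for s in stages)
    write = sum(s.get("shuffleWriteBytes", 0) for s in stages)
    return {"shuffle_read_bytes": read, "shuffle_write_bytes": write}


def human_bytes(n):
    """Format a byte count with binary (1024-based) units."""
    for unit in ("B", "KiB", "MiB", "GiB"):
        if n < 1024:
            return f"{n:.1f} {unit}"
        n /= 1024
    return f"{n:.1f} TiB"
```

Skipped stages appear in the list with zero shuffle bytes, so they do not distort the totals.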

The Kubernetes Dashboard is an open-source, general-purpose, web-based monitoring UI for Kubernetes.

In this case, we need to monitor our Spark application: collect application metrics with the Prometheus or REST APIs, and monitor pod resource usage with the Kubernetes Dashboard.

One of Spark's main features is speed: it can run programs up to a hundred times faster than Hadoop MapReduce in memory, and ten times faster even on disk.

Finding no documentation online on how to monitor Spark with Prometheus, and after asking around on Twitter and in the IRC channel, I decided to write this walk-through guide to monitoring Spark applications on Kubernetes.

Spark processes large amounts of data in memory, which is much faster than processing on disk. For a completed application, check the completed tasks, the status, and the total duration. Apache Spark is an open-source parallel processing framework that supports in-memory processing to boost the performance of applications that analyze big data.

Then we will explore different techniques and tools that help boost the performance and efficiency of your Spark applications. Spark's key features include speed, support for multiple languages, and advanced analytics.

To view a completed Apache Spark application, open Monitor, then select Apache Spark applications.

No one can monitor a process on Apache Spark without the proper knowledge, and monitoring Apache Spark can be tough even for experts. The basic built-in dashboard is simply not enough for a production-ready setup that monitors the data processing system.

There are several ways to monitor Spark applications. Spark Core is responsible for memory management, task monitoring, fault tolerance, interactions with storage systems, job scheduling, and all basic I/O activities.

At the executor level, useful metrics include the number of cores, CPU time, memory used, maximum memory allocated, and disk used. Apache Spark is an open-source, large-scale data processing engine that can read data from the Hadoop Distributed File System (HDFS) and enables applications in Hadoop clusters to run up to 100x faster in memory and 10x faster even on disk. Spark is a more general and faster processing platform.
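Most executor-level numbers can be read from `/api/v1/applications/[app-id]/executors`. The sketch below assumes the documented fields `id`, `totalCores`, `memoryUsed`, `maxMemory`, and `diskUsed`. Note that `memoryUsed` and `maxMemory` there refer to storage memory (cached blocks), not the full JVM heap. CPU time is not aggregated here: per the REST API documentation, stage records carry an `executorCpuTime` field in nanoseconds. The function name and the `include_driver` parameter are hypothetical.

```python
def executor_summary(executors, include_driver=False):
    """Aggregate cores, storage memory, and disk over executor records."""
    summary = {"cores": 0, "memory_used": 0, "max_memory": 0, "disk_used": 0}
    for ex in executors:
        # The REST API lists the driver alongside executors, with id "driver".
        if not include_driver and ex.get("id") == "driver":
            continue
        summary["cores"] += ex.get("totalCores", 0)
        # memoryUsed / maxMemory describe storage memory, not total heap.
        summary["memory_used"] += ex.get("memoryUsed", 0)
        summary["max_memory"] += ex.get("maxMemory", 0)
        summary["disk_used"] += ex.get("diskUsed", 0)
    total = summary["max_memory"]
    summary["memory_used_fraction"] = summary["memory_used"] / total if total else 0.0
    return summary
```

The driver is excluded by default so that "cores" reflects the capacity available to tasks.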

Apache Spark jobs define, schedule, monitor, and control the execution of Apache Spark processes. You can also get the latest metrics of a specified Apache Spark application through the Prometheus API.

This article explains how to monitor your Apache Spark applications so that you can keep an eye on their latest status, issues, and progress. The most vital feature of Apache Spark is its in-memory cluster computing, which increases the speed of data processing. April 1, 2021.

Apache Spark is an open-source engine for in-memory processing of big data at large scale. At the job level, track the number of active, completed, and failed jobs. However, we need to find a way to scrape these metrics into the Prometheus database, where our monitoring metrics are collected and analyzed.
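One way to bridge the gap is a small exporter that turns REST API job records into Prometheus's text exposition format. This sketch assumes job records from `/api/v1/applications/[app-id]/jobs` with a `status` of `RUNNING`, `SUCCEEDED`, `FAILED`, or `UNKNOWN`. The metric name `spark_app_jobs` and both helper names are hypothetical choices, not something Spark or Prometheus defines.

```python
from collections import Counter


def job_status_counts(jobs):
    """Count jobs as active (RUNNING), completed (SUCCEEDED), or failed (FAILED)."""
    counts = Counter(job.get("status", "UNKNOWN") for job in jobs)
    return {
        "active": counts["RUNNING"],
        "completed": counts["SUCCEEDED"],
        "failed": counts["FAILED"],
    }


def to_prometheus_text(counts, metric="spark_app_jobs"):
    """Render counts as one gauge with a status label, in text exposition format."""
    lines = [
        f"# HELP {metric} Number of Spark jobs by status.",
        f"# TYPE {metric} gauge",
    ]
    for status, value in sorted(counts.items()):
        lines.append(f'{metric}{{status="{status}"}} {value}')
    return "\n".join(lines) + "\n"
```

Serving this text from any HTTP endpoint that Prometheus scrapes would make the job counts queryable. A gauge fits better than a counter here, because the number of active jobs can go down.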

Spark only supported a handful of sinks out of the box, such as Graphite, CSV, and Ganglia, and Prometheus wasn't one of them, so we introduced a new Prometheus sink of our own (PR …, with a related Apache …). Newer releases (Spark 3.0 and later) do include a built-in PrometheusServlet sink. Spark provides high-performance capabilities for processing both batch and streaming workloads. To get a list of Apache Spark applications for a Synapse workspace, follow the documentation page "Monitoring - Get Apache Spark Job List".
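On Spark 3.0 or later, a configuration-only route to Prometheus exists. The sketch below makes three assumptions:

- The metrics system can be configured through `spark.metrics.conf.[instance].sink.[sink_name].[parameter]` properties instead of a `metrics.properties` file.
- The built-in `org.apache.spark.metrics.sink.PrometheusServlet` sink serves metrics at the configured path.
- The experimental `spark.ui.prometheus.enabled` flag exposes executor metrics at `/metrics/executors/prometheus` on the driver UI.

The helper functions are hypothetical conveniences for building `spark-submit` arguments, not part of any Spark API.

```python
def prometheus_conf(path="/metrics/prometheus"):
    """Spark properties enabling the built-in Prometheus endpoints (assumes Spark 3.0+)."""
    return {
        # Metrics for all instances ("*") via the PrometheusServlet sink.
        "spark.metrics.conf.*.sink.prometheusServlet.class":
            "org.apache.spark.metrics.sink.PrometheusServlet",
        "spark.metrics.conf.*.sink.prometheusServlet.path": path,
        # Experimental: executor metrics at /metrics/executors/prometheus.
        "spark.ui.prometheus.enabled": "true",
    }


def to_submit_args(conf):
    """Turn a property dict into a flat list of spark-submit --conf arguments."""
    args = []
    for key, value in sorted(conf.items()):
        args += ["--conf", f"{key}={value}"]
    return args
```

You can pass the list to `subprocess.run(["spark-submit", *to_submit_args(prometheus_conf()), "app.py"])`, where `app.py` is a placeholder. This keeps the `*` in the property names away from shell globbing.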
